When users search on Google, Bing, DuckDuckGo, or a number of other engines, their query is now increasingly answered first by an AI-generated summary, with conventional web results shunted further down the page.

But AI often doesn’t get things right.

False information in search results

Examples of wildly false information in such summaries are everywhere. Early highlights included Google suggesting that users add glue to pizza, or eat at least one small rock a day, and asserting that former US president Andrew Johnson graduated from university in 2005, despite having died in 1875.

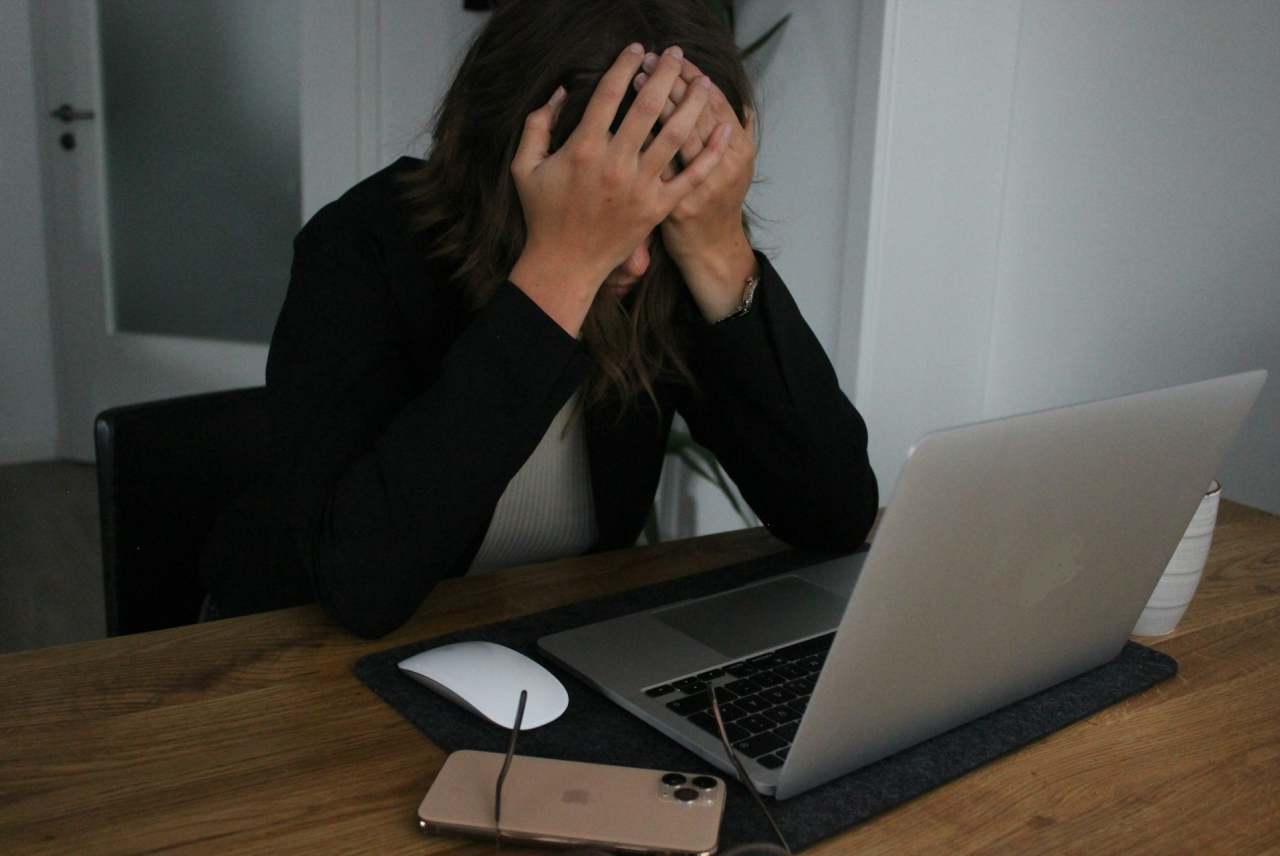

Many of these examples are humorous, but others are deeply concerning. For a time, Google’s AI Overview falsely stated that Ethical Consumer had founded Palestine Action, the direct action campaign group targeting weapons manufacturers and financiers. The claim was entirely hallucinated – there has never been any formal affiliation between us and Palestine Action. The government has contentiously proscribed the group as a terrorist organisation, which means people can be arrested for expressing support for it. A false claim like this could therefore leave readers worried about whether they can safely support our work, too.

A March 2025 study by researchers at the Tow Center for Digital Journalism found that AI search tools readily produced fake results rather than admitting they couldn’t locate the correct information, and that they frequently fabricated links instead of linking to the original source. Interestingly, they also found that “premium” paid services were little better, providing “more confidently incorrect answers than their free counterparts,” according to the researchers.

Google regularly fine-tunes and updates its models, and appears keen to demonstrate their ability to answer ever more complex, lengthy, and multimodal questions. However, does a general trend of improvement really merit our trust? An information provider that is correct 97% of the time is perhaps more dangerous than one that recommends glue on pizza – at least we know to treat the latter with ridicule.

But it's easy to envisage scepticism melting into blind trust as summaries generally improve, leaving users vulnerable to less obvious misinformation.

Will search summaries get better?

There is also no guarantee that accuracy will increase. The idea of “AI model collapse” has been gaining ground in recent months. The large language models (LLMs) that power AI search tools were initially trained on books, papers, articles and more: sources written by human beings. But these tools now also draw on real-time sources, effectively searching the web rather than relying solely on their training data.

In theory, this means that they’ll continually be fed with fresh, human-written knowledge. But, as anyone who has been online in 2025 will attest, the internet is flooded with AI-generated slop. The more that humans rely on AI to generate content, the more that AI systems will end up learning from content that was itself generated by AI.

It’s hard to see this ending well. Veteran tech journalist Steven J. Vaughan-Nichols has discussed how AI systems trained on their own outputs gradually lose accuracy, diversity, and reliability, meaning that models may already be caught in a slow-motion car crash.

Do we really want our search queries to be answered by AI summaries that are themselves citing half-baked, AI-generated blogs? Articles, arguments, and data could all be chewed up and regurgitated by thousands of models before they end up in your search summary, with the original, human-composed wisdom long lost by the end of the chain. As a 2024 Nature paper states: "The model becomes poisoned with its own projection of reality."